Who's on Top? Deterministic vs Generative Primacy

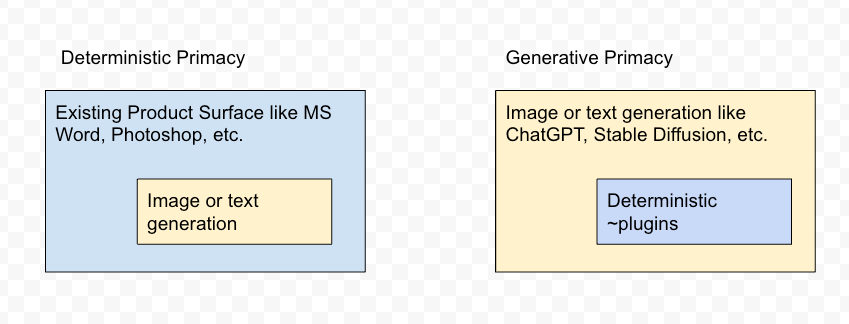

Prompt, or TL;DR: A five-paragraph essay about LLM primacy vs. deterministic primacy. I.e., ChatGPT having plugins is LLM primacy; a text box in an app getting LLM autocomplete is deterministic primacy. It’s about whether the dominant UI is the open-ended LLM or constrained, deterministic code. Like whether we give the LLM to a computer to use, or we give a computer to the LLM to use.

(The essay, generated and then edited by me):

As technology advances, the debate over the dominant user interface (UI) in human-computer interaction intensifies. The central question is whether the primary UI should be open-ended, as with large language models (LLMs) like ChatGPT, or constrained by deterministic code, as with autocomplete text boxes. This essay explores the benefits and drawbacks of LLM primacy and deterministic primacy, ultimately arguing that a balanced approach is essential for effective human-computer interaction.

LLM primacy holds that the dominant UI should be an open-ended large language model, capable of generating complex responses and adapting to users' needs. This approach allows for more fluid and creative communication, since users can hold a natural conversation with the computer. Additionally, LLMs improve with each new model generation, which may lead to more accurate and useful responses over time. However, there are concerns about the unpredictability of LLMs, their susceptibility to manipulation (for example, prompt injection), and the ethical implications of replacing human interaction with AI-generated responses.

In contrast, deterministic primacy favors a UI governed by constrained, deterministic code, such as an autocomplete text box. This approach offers more control and predictability: the app decides where model output appears and what the user can do with it, which limits the risks of AI-generated content. Deterministic interfaces can also be tailored to specific tasks or industries. However, this approach may limit the system's flexibility and adaptability, since it is confined by predetermined rules and workflows.
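To make the two control flows concrete, here is a minimal Python sketch. It assumes a hypothetical `model` function that maps a prompt string to reply text; no real LLM API is used. `autocomplete` illustrates deterministic primacy: app code calls the model for one narrow job and can discard its output. `agent` illustrates LLM primacy: the model drives the loop and picks which tool (the analogue of a plugin) to call. The `CALL name: arg` reply format is an invented convention for illustration, not any real plugin protocol.

```python
from typing import Callable, Dict, Optional

# Hypothetical stand-in for an LLM: takes a prompt string, returns reply text.
Model = Callable[[str], str]


def autocomplete(prefix: str, model: Model, max_len: int = 40) -> Optional[str]:
    """Deterministic primacy: the app owns the UI and asks the model for one narrow job."""
    suggestion = model(f"Continue this text: {prefix}")
    candidate = prefix + suggestion
    # App code, not the model, decides whether the suggestion is shown at all.
    if len(candidate) > max_len or "\n" in suggestion:
        return None
    return candidate


def agent(request: str, model: Model, tools: Dict[str, Callable[[str], str]],
          max_steps: int = 5) -> str:
    """LLM primacy: the model owns the loop and decides which tool (plugin) to call."""
    transcript = request
    for _ in range(max_steps):
        reply = model(transcript)
        # Invented convention: "CALL <tool>: <arg>" requests a tool; anything else is the answer.
        if reply.startswith("CALL "):
            name, _, arg = reply[len("CALL "):].partition(": ")
            result = tools[name](arg) if name in tools else f"unknown tool: {name}"
            transcript += f"\n{reply}\nRESULT: {result}"
        else:
            return reply
    # Step budget exhausted: return the transcript so the caller can inspect it.
    return transcript


# A scripted fake model, so the example runs without any real LLM.
def fake_model(prompt: str) -> str:
    if prompt.startswith("Continue this text:"):
        return " world"
    if "RESULT:" in prompt:
        return "It is 21C in Paris."
    return "CALL weather: Paris"


print(autocomplete("hello", fake_model))  # hello world
print(agent("What's the weather in Paris?", fake_model,
            {"weather": lambda city: f"21C in {city}"}))  # It is 21C in Paris.
```

The difference is who owns the loop: in `autocomplete` the app's own check has the last word, while in `agent` the model's reply decides what runs next.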

A balanced approach to human-computer interaction acknowledges the strengths and weaknesses of both LLM and deterministic primacy. Integrating elements of both into the UI can yield a more versatile and adaptive system. By leveraging the open-ended nature of LLMs while keeping a degree of deterministic control, users get more fluid communication with technology that is still tailored to specific needs.

But deterministic primacy is the ~old version. It’s what happens when you apply the new capability (e.g., LLMs) to the old apps. There are lots of historical rhymes here. A notable one is vaudeville and cinema. Vaudeville, an entertainment format that featured a series of unrelated acts, eventually gave way to modern cinema, which offers a more cohesive and immersive storytelling experience. Early films were themselves first shown as just one act on a vaudeville bill, much as LLMs now appear as one feature inside existing apps. Similarly, deterministic primacy fits the powerful capabilities of LLMs into pre-existing paradigms, which may limit them. While it offers predictability and control, deterministic primacy may restrict the dynamic and innovative capacities of LLMs, preventing them from reaching their full potential in human-computer interaction. Just as cinema transformed entertainment by breaking free from the constraints of vaudeville, embracing the open-ended nature of LLMs may lead to more groundbreaking advances in technology and interaction.

There’s an implicit debate between LLM primacy and deterministic primacy. It mirrors the question of whether generative AI will be a sustaining innovation, improving existing products, or a disruptive one, replacing them. Which primacy to use depends on your situation. By harnessing the strengths of both LLMs and deterministic code, we can build more sophisticated and adaptable UIs, fostering a more seamless and effective relationship between humans and technology. The future of human-computer interaction lies not in choosing one model over the other, but in finding the balance that best serves users' needs and promotes technological innovation.

Historically, though, the newer paradigm in such transitions eventually wins out and subsumes the old. It just takes ~10 years of product and UI experimentation.