Prediction Market Replication Bounties

Science, especially psychology and social psychology, is in a replication crisis.

The replication crisis … is a methodological crisis in science in which scholars have found that the results of many scientific studies are difficult or impossible to replicate or reproduce on subsequent investigation, either by independent researchers or by the original researchers themselves.[1][2] The crisis has long-standing roots; the phrase was coined in the early 2010s[3] as part of a growing awareness of the problem.

Because the reproducibility of experiments is an essential part of the scientific method,[4] the inability to replicate the studies of others has potentially grave consequences for many fields of science in which significant theories are grounded on unreproducible experimental work.

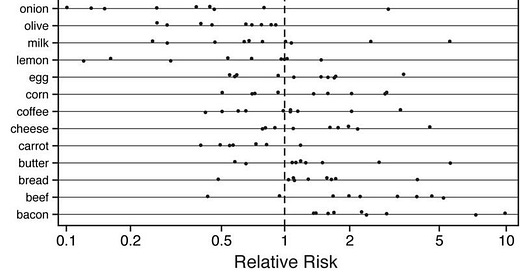

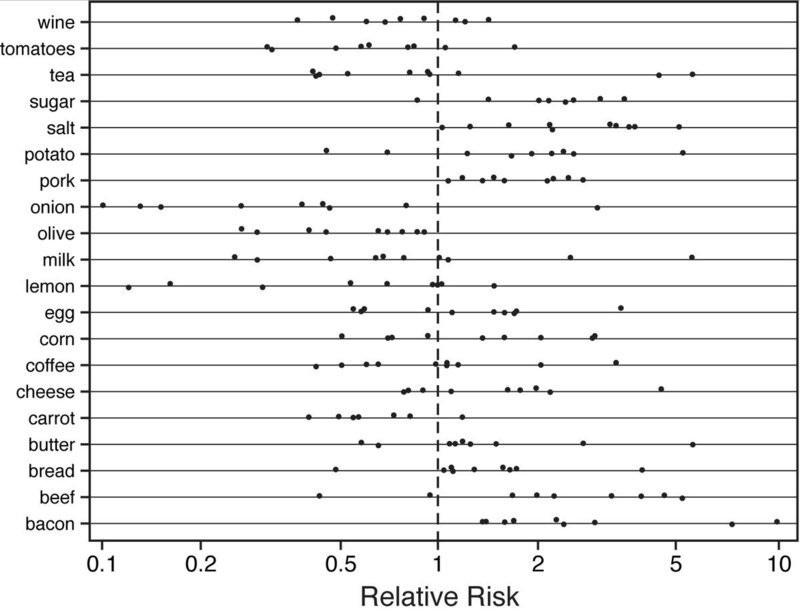

This doesn’t help the public’s confidence in science, especially when most of the science the public reads about is more about what scares people than what’s true. Take, for example, all the different ways some foods apparently cause or prevent cancer:

So of course the public either picks whichever studies they want to hear or dismisses it all as bupkis. The incentives of science and science journalism are not quite the pursuit of truth. Many scientists do pursue truth out of an ethic, but the realpolitik is not so clean: Publish a lot of articles, get cited a lot. A science journalist’s job is not to report the (sometimes boring) truth, but to publish what gets people to read: Stuff that’s scary or surprising. Scary and/or surprising is not always true.

To scientists’ credit, they’re trying to reproduce stuff, and taking it very seriously. Just listen to this podcast by two prominent psychologists. The replication crisis is very personal to them. Much of their own work, or work they respected, may now be useless.

Prediction markets to the rescue?

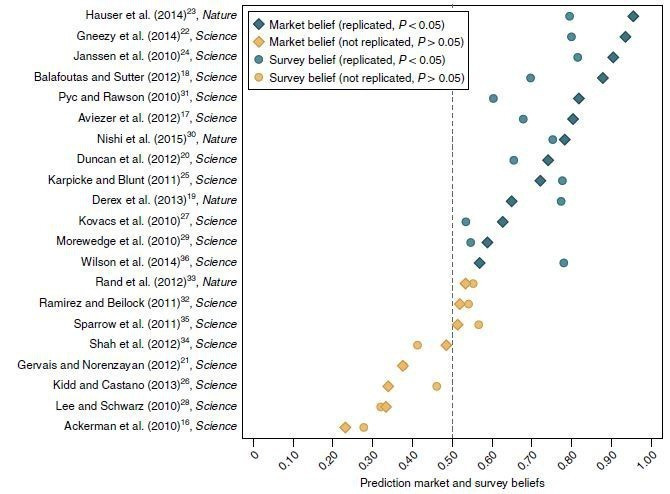

The Atlantic covered a very clever use of prediction markets. Scientists were asked to bet on which studies would replicate, and they were quite good at it!

(source)

Now use the market to incentivize replicating controversial studies

Create a prediction market for studies (read more here).

When a single “yes” vote is cast, nothing new happens.

When a matching “no” vote is cast, add to a bounty for the study in question.

Whoever attempts to replicate the study collects the bounty (whether the study replicates or not).

If and only if the original study replicates, the original paper’s authors receive part of the bounty.
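The steps above can be sketched as a small ledger. This is only an illustration under stated assumptions: the class name `StudyMarket`, the fixed `contribution_per_match` added for each matched yes/no pair, and the `author_share` fraction are all hypothetical, since the post does not say how large each contribution is or how the bounty is split. Payouts to the bettors themselves are left out.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class StudyMarket:
    """Toy bounty ledger for one study. All parameters are hypothetical."""

    contribution_per_match: float = 1.0  # assumed amount added per matched yes/no pair
    author_share: float = 0.25           # assumed fraction paid to the original authors
    yes_votes: int = 0
    no_votes: int = 0

    @property
    def matched_pairs(self) -> int:
        # A vote only counts toward the bounty once it has a counterpart
        # on the other side, so a lone "yes" changes nothing.
        return min(self.yes_votes, self.no_votes)

    @property
    def bounty(self) -> float:
        return self.matched_pairs * self.contribution_per_match

    def vote(self, replicates: bool) -> None:
        """Record one vote that the study will (True) or won't (False) replicate."""
        if replicates:
            self.yes_votes += 1
        else:
            self.no_votes += 1

    def settle(self, replicated: bool) -> Dict[str, float]:
        """Split the bounty after a replication attempt has been made.

        The replicator is paid either way; the original authors are paid
        only if the study replicated.
        """
        authors = self.bounty * self.author_share if replicated else 0.0
        return {"replicator": self.bounty - authors, "original_authors": authors}
```

For example, after `market = StudyMarket()` a call to `market.vote(True)` leaves `market.bounty` at zero, and a following `market.vote(False)` raises it by one contribution. Calling `market.settle(replicated=False)` then gives the whole bounty to the replicator.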

This would incentivize:

Attempting to replicate controversial studies (with a lot of bets for and against).

Replication attempts in general.

Publishing studies that do replicate, since their authors share in the bounty.

Reproducing studies that most agree will replicate is not as incentivized as controversial studies.

Reproducing studies that most agree will not replicate is not as incentivized as controversial studies.

Small bounties discourage easy replications of studies most people already agree on, but the market’s verdict on whether a study is expected to replicate is still public.
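To see why controversial studies attract the largest bounties, here is a rough sketch that assumes the bounty grows by a fixed amount per matched yes/no pair. The `bounty` function, the `contribution_per_match` parameter, and the vote counts are made up for illustration.

```python
def bounty(yes_votes: int, no_votes: int, contribution_per_match: float = 1.0) -> float:
    """Bounty under the assumption that only matched yes/no pairs contribute."""
    return min(yes_votes, no_votes) * contribution_per_match


# Three hypothetical studies, each with 100 votes split differently.
splits = {
    "controversial": (50, 50),
    "most expect it to replicate": (90, 10),
    "most expect it to fail": (10, 90),
}

bounties = {label: bounty(yes, no) for label, (yes, no) in splits.items()}

for label, amount in bounties.items():
    print(f"{label}: bounty = {amount}")
```

With the same number of total votes, the evenly split study ends up with five times the bounty of either lopsided one, which is the sense in which one-sided studies are "not as incentivized."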

But money may not change scientific behavior. The incentives that created the reproducibility crisis are about prestige, standing, and academic survival. However, an active prediction market could make it clear which studies other scientists doubt. Or: the prediction market could bring doubt onto the sketchier studies. This could change the prestige incentives around publishing bad science by adding in the risk of public bets against your studies. It might even push scientists to publish their data and code in order to convince people their methods were sound.